Navigating Batch Effects in Low-Yield RNA-seq: A Practical Guide for Robust Transcriptomic Analysis

Batch effects present a formidable challenge in low-yield RNA-seq data analysis, where limited starting material amplifies technical variation and threatens data reliability.

Navigating Batch Effects in Low-Yield RNA-seq: A Practical Guide for Robust Transcriptomic Analysis

Abstract

Batch effects present a formidable challenge in low-yield RNA-seq data analysis, where limited starting material amplifies technical variation and threatens data reliability. This article provides a comprehensive guide for researchers and drug development professionals, covering the foundational understanding of batch effects specific to low-input protocols, a survey of state-of-the-art correction methodologies, strategies for troubleshooting common pitfalls, and a framework for rigorous method validation. We synthesize recent advances, including the empirical-Bayes-based ComBat-ref and machine-learning-driven quality assessment, to offer actionable insights for preserving biological signal while removing unwanted technical noise, ultimately enhancing the reproducibility and power of transcriptomic studies.

Understanding the Batch Effect Challenge in Low-Yield RNA-seq Data

Technical Support Center

Troubleshooting Guides & FAQs

Q1: My PCA plot shows samples clustering strongly by sequencing date, not by treatment group. Is this a batch effect, and how can I confirm it? A: Yes, this pattern is highly suggestive of a major batch effect. To confirm:

- Statistical Test: Perform a PERMANOVA (Adonis) test using the

veganR package on your sample-to-sample distance matrix, withsequencing_dateas a factor. A significant p-value confirms the batch has a statistically measurable effect. - Surrogate Variable Analysis (SVA): Use the

svapackage to estimate surrogate variables of variation. If the first few surrogate variables correlate strongly with your technical batches, it confirms the effect. - Control Gene Inspection: Plot the expression of known housekeeping genes (e.g., ACTB, GAPDH) or exogenous spike-in controls across batches. Inconsistent expression indicates technical bias.

Q2: After batch correction (e.g., using ComBat), my biological signal seems attenuated. Did I over-correct? A: Over-correction is a common risk. Diagnose with these steps:

- Positive Control Check: Ensure known, strong differentially expressed genes (DEGs) from literature for your biological condition are still present and significant post-correction.

- Negative Control Check: The variance of negative control genes (e.g., genes not expected to change) or spike-ins should be reduced, but not driven to zero.

- Visual Inspection: Generate PCA plots colored by batch post-correction. Batches should be intermingled. Then, generate PCA plots colored by biological group. The biological separation should remain or improve. If biological groups are now completely overlapping, over-correction is likely.

Q3: In my low-yield RNA-seq experiment, I have batch effects confounded with my key biological condition. What correction strategies are viable? A: Confounded designs are challenging, especially with low-input data prone to increased technical noise.

- Prior to Correction: Use RNA-seq specific normalization methods (e.g.,

DESeq2's median of ratios,edgeR's TMM) that are robust to library size and composition differences typical in low-yield data. - Advanced Correction Methods:

- RUV (Remove Unwanted Variation): Ideal for confounded designs. It uses negative control genes (e.g., spike-ins, housekeeping genes, or empirically derived genes) to estimate and remove technical factors.

RUVseqis a key package. - Harmony or fastMNN: These integration algorithms can sometimes separate technical and biological sources when partially confounded, but require careful validation.

- RUV (Remove Unwanted Variation): Ideal for confounded designs. It uses negative control genes (e.g., spike-ins, housekeeping genes, or empirically derived genes) to estimate and remove technical factors.

- Critical: Do NOT include the confounded biological covariate in the batch correction model. Instead, validate findings with an orthogonal method (e.g., qPCR on key genes from independent samples).

Q4: What are the best practices for designing an experiment to minimize batch effects from the start, particularly with precious low-yield samples? A: Proactive design is crucial.

- Randomization & Blocking: Randomly assign samples from all biological groups to each processing batch (library prep, sequencing run). Never process all samples from one group in a single batch.

- Include Technical Replicates: If sample quantity allows, split a subset of samples to be processed in multiple batches to assess batch variability directly.

- Use Spike-in Controls: Add exogenous RNA controls (e.g., ERCC, SIRV) at the start of protocol. Their known ratios provide a ruler for technical noise.

- Balance & Replicate Batches: Ensure each batch contains a similar distribution of biological conditions and, if possible, replicate the entire experiment across independent batches.

Q5: How do I choose between parametric (ComBat) and non-parametric (limma removeBatchEffect) batch correction methods? A: The choice depends on your data structure and assumptions.

| Method (Package) | Type | Key Assumption | Best For | Risk with Low-Yield Data |

|---|---|---|---|---|

ComBat (sva) |

Parametric | Batch effect mean/variance is consistent across genes. | Larger sample sizes (>10 per batch), balanced designs. | Can be sensitive to outliers and violate distributional assumptions if noise is high. |

removeBatchEffect (limma) |

Non-parametric | Linear model can describe data. Makes no strict distributional assumption on the batch effect. | Smaller sample sizes, when you plan to follow with a linear modeling tool like limma. |

May be less efficient but more robust to non-normality. |

RUV (RUVseq) |

Factor-based | Technical variation can be captured via control genes/spike-ins. | Confounded designs, low-yield data with spike-ins. | Relies on quality of control genes; poor controls lead to poor correction. |

Experimental Protocols

Protocol 1: Batch Effect Diagnosis Using Surrogate Variable Analysis (SVA) Objective: To identify unknown sources of variation, including hidden batch effects, in a transcriptomics dataset.

- Load Data: Import your normalized count matrix and sample metadata into R.

- Define Models:

mod: The full model matrix including your biological variables of interest (e.g.,~ treatment_group).mod0: The null model matrix, typically containing only intercept or known technical covariates you wish to adjust for (e.g.,~ 1or~ known_covariate).

- Estimate Surrogate Variables: Use the

num.svfunction to estimate the number of surrogate variables (SVs). Then, apply thesvafunction to estimate the SVs themselves.

- Inspect SVs: Correlate the estimated SVs (

svobj$sv) with both technical (batch, RIN, date) and biological metadata. High correlation with technical factors confirms a batch effect.

Protocol 2: Batch Correction Using the RUV Method with Spike-in Controls Objective: To correct for batch effects in a confounded experimental design using exogenous spike-in RNAs.

- Data Preparation: Generate a count matrix where the last rows correspond to your spike-in control RNAs (e.g., ERCC). Create separate matrices:

counts_all(genes + spikes) andcounts_spikes(spikes only). - Define Negative Controls: The row indices or names corresponding to the spike-in controls are your "negative control" set, as they should not be influenced by biological state.

- Perform RUVg Correction (Using Controls):

- Use Corrected Data: The normalized counts for your endogenous genes are in

normCounts(set_ruv). The estimated batch factor (W_1) frompData(set_ruv)should be included as a covariate in downstream differential expression models.

Mandatory Visualizations

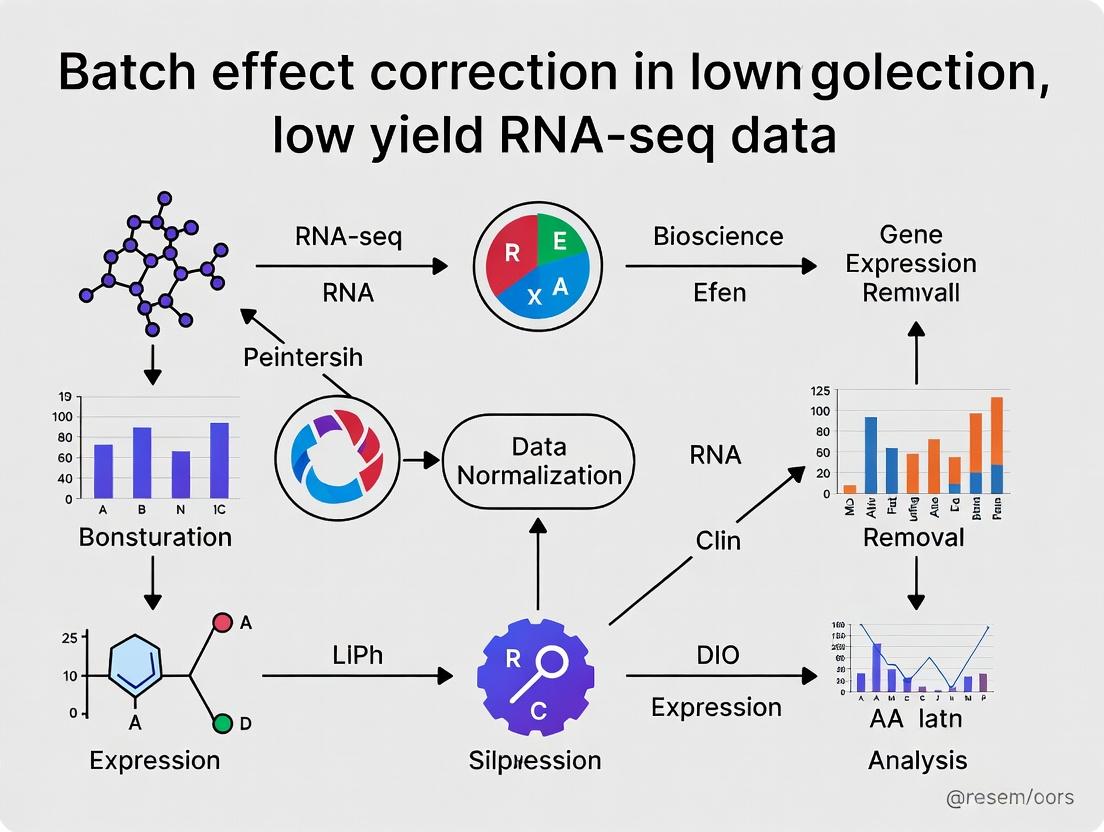

Title: The Origin and Correction of Batch Effects in RNA-Seq Workflow

Title: Decision Pathway for Choosing a Batch Correction Method

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Batch Effect Management |

|---|---|

| Exogenous Spike-in Controls (e.g., ERCC, SIRV) | Added at lysis. Provide an internal, absolute standard to track technical variation from start to finish, enabling robust normalization (e.g., for RUV). |

| Unique Molecular Identifiers (UMIs) | Incorporated into library prep protocols. Correct for PCR amplification bias and duplicate reads, a major technical noise source in low-input protocols. |

| Commercial Low-Input/Low-Quality RNA Library Prep Kits | Optimized chemistries (e.g., SMARTer, NuGEN) to minimize protocol-induced bias and improve reproducibility when sample input is limiting. |

| Automated Liquid Handling Systems | Reduce operator-induced variability in sample and reagent handling during library preparation across many samples/batches. |

| RNA Integrity Number (RIN) Equivalent Dyes | Accurately assess RNA quality of low-yield samples without traditional bioanalysis, a key covariate for downstream models. |

| Batch-Tracked Reagents | Using the same lot numbers of critical reagents (e.g., reverse transcriptase, ligase) across all samples minimizes lot-to-lot variability. |

Why Low-Yield and Challenging Samples Are Particularly Vulnerable to Batch Variation

Low-yield RNA-seq samples, such as those from rare cells, fine-needle aspirates, or archived tissues, exhibit heightened sensitivity to technical noise. The limited starting material necessitates amplification steps, making results susceptible to batch effects introduced by reagent lots, personnel, or instrument variations. This technical variation can obscure true biological signals, complicating data integration and interpretation. Our thesis research focuses on correcting these batch effects to recover reliable biological insights from such precious samples.

Troubleshooting Guides & FAQs

Q1: My low-input RNA-seq data shows high variability between replicates processed in different batches. How can I determine if it's a batch effect or biological variation? A: First, perform Principal Component Analysis (PCA). Batch effects often cause samples to cluster by processing date or batch, not by biological group. Use positive controls (e.g., external RNA controls consortium [ERCC] spike-ins) if included. High variance in spike-in expression across batches indicates technical batch variation.

Q2: During library preparation for single-cell/low-yield samples, I observe high duplication rates and low complexity libraries. What are the main causes? A: This typically stems from pre-amplification bias or degradation. Ensure RNA integrity (RIN > 7 for bulk, although often lower for challenging samples). Optimize the number of PCR cycles during library amplification—excessive cycles favor duplicate reads. Use unique molecular identifiers (UMIs) to distinguish technical duplicates from biological molecules.

Q3: After batch correction, my differential expression results seem overly attenuated. Could the correction be too aggressive?

A: Yes. Over-correction is a known risk, especially with low-yield data where batch can be confounded with condition. Apply correction methods (like ComBat-seq or Limma's removeBatchEffect) only to genes not expected to be differentially expressed (e.g., housekeeping genes), or use a method that models condition. Always validate with known positive/negative control genes.

Q4: What is the minimum recommended sequencing depth for low-yield samples to mitigate batch-related noise? A: While sample-dependent, a general guideline is provided below. Deeper sequencing helps statistically distinguish low-abundance transcripts from technical noise.

| Sample Type | Recommended Minimum Reads (Million) | Key Rationale |

|---|---|---|

| Standard Bulk RNA-seq | 20-30 M | Baseline for gene-level quantification. |

| Low-Yield Bulk (e.g., LCM, FFPE) | 40-60 M | Compensates for lower complexity and higher technical variance. |

| Single-Cell RNA-seq (per cell) | 50-100 K | Captures sparse transcriptome; batch effects are assessed across cells. |

Experimental Protocols

Protocol 1: ERCC Spike-In Normalization for Batch Assessment

Purpose: To quantify technical variation independent of biological content.

- Spike-In Addition: Add a defined volume of ERCC ExFold RNA Spike-In Mix (Thermo Fisher) to your low-yield lysate before any extraction or amplification steps. Use a dilution appropriate for your expected endogenous RNA amount.

- Proceed with Workflow: Continue with your standard low-input RNA-seq protocol (e.g., SMART-Seq).

- Analysis: Align reads to a combined reference (genome + ERCC sequences). Calculate the log2 counts per million (CPM) for each ERCC spike-in.

- Assessment: Plot the coefficient of variation (CV) of ERCC spikes across batches. High, consistent CV indicates batch-driven technical noise.

Protocol 2: UMI-Based Deduplication for Low-Input Libraries

Purpose: To accurately count original molecules and reduce PCR amplification bias.

- Library Prep: Use a UMI-equipped library preparation kit (e.g., from Takara Bio, NuGEN).

- Sequencing: Sequence the library, ensuring the UMI sequence is read (often in Read 1).

- Data Processing: Use a dedicated tool (e.g.,

umisorzUMIs). The tool will:- Extract UMIs and cell barcodes.

- Alocate reads to genes.

- Collapse reads with identical UMIs mapping to the same gene into a single count.

Protocol 3: Diagnostic PCA Pre- and Post-Batch Correction

Purpose: To visually assess batch effect and the efficacy of correction.

- Generate Count Matrix: Create a raw gene count matrix for all samples.

- Filter & Normalize: Filter low-expressed genes. Apply a variance-stabilizing transformation (e.g.,

vstin DESeq2) or generate log2(CPM) values. - Pre-Correction PCA: Perform PCA on the normalized data. Color points by

Batchand shape byCondition. - Apply Batch Correction: Apply a chosen method (e.g.,

ComBat_seqfrom thesvapackage in R) to the raw counts. Re-normalize the corrected counts. - Post-Correction PCA: Repeat PCA on the corrected, normalized data. Successful correction shows clustering by

Condition, notBatch.

Visualizations

Diagram 2: Batch Effect Correction & Validation Logic

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function & Relevance to Low-Yield/Batch Stability |

|---|---|

| ERCC Spike-In Mixes | Defined, exogenous RNA controls added prior to processing. Crucial for distinguishing technical batch variation from biological signal in precious samples. |

| UMI Adapters | Oligonucleotides containing random molecular barcodes. Tag each original molecule to correct for PCR duplication bias, improving quantification accuracy. |

| Single-Cell/Low-Input Kit | Optimized reagents (e.g., SMARTer kits) for cDNA synthesis and amplification from minimal RNA. Consistent lot-to-lot performance is critical. |

| RNA Integrity Number (RIN) | Bioanalyzer/TapeStation metric. While often lower for challenging samples (FFPE, LCM), it is a key covariate to include in batch effect models. |

| Homogenization Beads | Consistent bead type/size (e.g., zirconia-silica) ensures reproducible lysis efficiency, a major source of pre-analytical batch variation. |

| RNase Inhibitors | Essential for protecting already-low RNA amounts during sample handling and reverse transcription, reducing batch-failure risk. |

Technical Support Center

Welcome to the Batch Effect Troubleshooting Hub. This center provides targeted guidance for researchers encountering technical variability in low-yield RNA-seq experiments. All content is framed within the context of advancing robust batch effect correction methodologies.

Troubleshooting Guides & FAQs

Section 1: Sample Collection & Preparation

Q1: Our single-cell RNA-seq data shows clear clustering by isolation date, not cell type. What are the likely culprits?

- A: This is a classic sample preparation batch effect. Key sources include:

- Reagent Lot Variability: Different lots of collagenase, dissociation enzymes, or PBS can have varying efficiencies and cellular stress profiles.

- Ambient Temperature Fluctuations: Time from tissue resection to preservation impacts RNA integrity, especially critical for low-yield samples.

- Technician Protocol Drift: Minor differences in vortexing time, centrifugation speed, or pellet handling can introduce systematic noise.

- Protocol Mitigation: Implement a "Staggered Processing" design. If processing 24 samples over 4 days, process 6 samples each day, ensuring each experimental group is represented daily. This confounds day (batch) with group less severely.

- A: This is a classic sample preparation batch effect. Key sources include:

Q2: For low-input total RNA-seq, our Bioanalyzer profiles look good, but final library yields are highly variable. Why?

- A: Pre-amplification steps are major batch effect sources for low-yield material.

- cDNA Synthesis Kit Lot: Efficiency of reverse transcriptase directly correlates with final library complexity. Switching lots mid-study can cause a batch shift.

- PCR Amplification Cycle Number: Even a ±1 cycle difference can cause significant quantitative bias. Use the minimum necessary cycles and keep constant.

- Protocol Mitigation: For all samples in a study, use reagents from a single, unified lot number. Perform a pilot test to determine the exact minimum PCR cycles needed for your lowest-yield sample and apply that cycle number to all samples.

- A: Pre-amplification steps are major batch effect sources for low-yield material.

Section 2: Library Preparation & Sequencing

Q3: Our samples were sequenced across two different flow cell lanes. Now we see a lane-specific bias. What causes this?

- A: This is a sequencing-run batch effect. Primary factors are:

- Flow Cell Manufacturing Variance: Cluster density and phasing/pre-phasing rates differ between flow cells and lanes.

- Sequencing Chemistry Depletion: Reagent degradation over a run can cause declining quality scores and output for later-loaded lanes.

- Base Caller Software Updates: Changes in the algorithm between runs can alter read assignments.

- Protocol Mitigation: Employ "Interleaved Sequencing" across lanes. For example, if you have 8 samples and 2 lanes, load each sample equally across both lanes (e.g., 50% of each library in Lane 1, 50% in Lane 2). This distributes lane effects across all samples.

- A: This is a sequencing-run batch effect. Primary factors are:

Q4: We pooled libraries equimolarly based on Qubit, but sequencing depth varies drastically. How do we prevent this?

- A: Fluorometric assays (Qubit) measure total double-stranded DNA but do not reflect the fraction of library fragments containing valid adapters for cluster generation.

- Protocol Mitigation: Use qPCR-based quantification (e.g., Kapa Biosystems Library Quant kit) for final pool normalization, as it specifically quantifies amplifiable library fragments. Re-normalize pools after any size-selection or cleanup step.

Section 3: Data & Analysis

- Q5: After merging public dataset with our own, batch effects dominate the PCA. What's the first step to diagnose this?

- A: Create a "Batch-Parameter" Table to correlate technical variables with principal components. This identifies the most influential batch sources.

Table 1: Common Quantitative Sources of Batch Variability in RNA-seq

| Source | Metric Impacted | Typical Variation Range | Detection Method |

|---|---|---|---|

| RNA Integrity | 3'/5' Bias, Gene Body Coverage | RIN: 7.0 - 10.0 | Bioanalyzer, PCA on coverage |

| Library Concentration | Sequencing Depth | CV can be >30% with fluorometry only | Depth distribution plots |

| Cluster Density | % PF, Mismatch Rate | 180-220 K/mm² (Illumina NovaSeq) | Sequencing platform metrics |

| PCR Duplication Rate | Library Complexity | 10-50% for low-input protocols | Duplication metrics from aligners |

| Date of Processing | Global Expression Profiles | - | PCA colored by date |

Experimental Protocols for Batch Effect Assessment

Protocol 1: Spike-in Control Experiment to Distinguish Technical from Biological Variation

- Purpose: To quantify technical noise intrinsic to your low-yield RNA-seq workflow.

- Materials: External RNA Controls Consortium (ERCC) spike-in mixes.

- Method: a. Split a single, homogeneous low-yield biological sample into multiple aliquots (e.g., 6-12). b. Spike an identical amount of ERCC mix into each aliquot prior to cDNA synthesis. c. Process each aliquot independently through the entire library prep and sequencing pipeline, ideally across different days/operators. d. Sequence all libraries.

- Analysis: The variation in measured ERCC transcript counts across aliquots represents the non-biological, batch-specific technical variation of your entire workflow.

Protocol 2: Sample Replication Design for Batch Modeling

- Purpose: To generate data suitable for statistical batch correction algorithms (e.g., ComBat, limma).

- Method: a. Replicate Across Batches: Ensure each biological condition or cell type is represented in every batch (library prep day, sequencing run). b. Include Positive Controls: Process a reference standard sample (e.g., Universal Human Reference RNA) in every batch. c. Randomize: Randomize the processing order of samples within a batch to avoid confounding with time-of-day effects.

- Output: A data matrix where the

Batchcovariate is orthogonal to theConditioncovariate, enabling software to separate and remove batch-associated variance.

Visualizations

Title: Batch Effect Confounding in a Poor Experimental Design

Title: Balanced Design for Effective Batch Correction

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Low-Yield RNA-seq Batch Control

| Item | Function & Relevance to Batch Effects | Example Product(s) |

|---|---|---|

| ERCC Spike-in Mix | Artificial RNA molecules added to lysate. Allows absolute quantification and precise measurement of technical variation across batches. | Thermo Fisher Scientific ERCC Spike-In Mix |

| Universal Human Reference RNA | Complex, well-characterized RNA pool from multiple cell lines. Serves as a positive inter-batch control to monitor performance drift. | Agilent Technologies Universal Human Reference (UHR) RNA |

| qPCR-based Library Quant Kit | Accurately quantifies amplifiable library fragments via adaptor-specific primers. Critical for achieving equimolar pooling and avoiding depth batch effects. | Roche KAPA Library Quantification Kit |

| Single-Lot Reagent Bulk | Purchasing all critical enzymes (RT, PCR) and kits in a single lot for an entire study eliminates lot-to-lot variability, a major batch confounder. | Various (Plan procurement ahead) |

| Unique Dual Index (UDI) Kits | Index primers with unique dual combinations per sample. Eliminates index hopping crosstalk between samples pooled in the same batch. | Illumina TruSeq UD Indexes, IDT for Illumina UD Indexes |

| RNA Integrity Number (RIN) Standard | Provides a consistent metric for input quality, allowing samples below a threshold (e.g., RIN<7) to be flagged as potential outliers. | Agilent RNA 6000 Nano Kit |

Technical Support Center: Batch Effect Troubleshooting in Low-Yield RNA-seq

Troubleshooting Guides

Guide 1: Diagnosing Batch Effects in Your Data

- Symptom: PCA plot shows strong clustering by processing date, sequencing lane, or technician.

- Action: Perform a differential expression analysis using only batch information as the covariate. A significant number of false positives indicates a strong batch effect.

- Verification: Use the

svaseqorruvseqpackage to estimate surrogate variables of unwanted variation and visualize their correlation with experimental batches.

Guide 2: Protocol for Low-Yield Sample QC Prior to Library Prep

- Problem: RNA degradation or insufficient input leads to failed libraries and technical noise.

- Action Steps:

- Use a fluorometric assay (e.g., Qubit RNA HS Assay) for accurate low-concentration quantification.

- Check RNA Integrity Number (RIN) or DV200 (% of fragments >200nt) on a TapeStation or Bioanalyzer. For low-yield samples, DV200 is more reliable.

- If using a whole transcript amplification kit, perform technical replicates of the amplification reaction for critical low-input samples.

- Threshold: Proceed only if DV200 > 30% for FFPE or severely degraded samples; aim for RIN > 7 for intact samples.

Frequently Asked Questions (FAQs)

Q1: My negative controls (e.g., blank samples) are clustering with real samples in the PCA. What does this mean? A: This is a critical red flag. It indicates overwhelming technical noise or contamination that is stronger than your biological signal. You must:

- Identify and remove the source of contamination (reagents, cross-talk).

- Apply aggressive batch correction (e.g.,

ComBat-seqwith a prior defined by negative controls) or consider re-sequencing.

Q2: I applied ComBat, but my results still seem driven by batch. What went wrong? A: ComBat assumes the batch effect is orthogonal to the biological variable of interest. If they are confounded (e.g., all controls were processed in Batch A and all treatments in Batch B), correction will fail or remove biological signal. Solutions include:

- Experimental Design: Re-randomize samples across batches if possible.

- Statistical Approach: Use a model that includes both batch and condition as covariates (e.g., in

DESeq2orlimma), or use a method likeRUVseqwith empirical controls.

Q3: For single-cell or ultra-low-input RNA-seq, which batch correction method is most robust? A: No single method is universally best. The current (2023-2024) consensus is a tiered approach:

- Within-Platform: Use

harmony,Seurat's CCA, orscVIfor single-cell data. - Across-Platform/Technology: Use

limma'sremoveBatchEffectfor initial exploration, followed by careful validation using known marker genes. - Mandatory: Always validate correction by confirming that known biological replicates cluster together and that batch-specific marker genes are removed.

Table 1: Impact of Uncorrected Batch Effects on Differential Expression (DE) Analysis

| Metric | No Batch Effect | With Uncorrected Batch Effect | After Successful Correction |

|---|---|---|---|

| False Discovery Rate (FDR) | ~5% (as set) | Increased to 15-40% | Returns to ~5-10% |

| Number of DE Genes | 1,500 (True Positives) | Inflated to 3,000+ (Many False) | ~1,200-1,800 (Precise) |

| Reproducibility (Across Batches) | High (R > 0.95) | Very Low (R < 0.3) | Restored to Moderate-High (R > 0.8) |

Table 2: Performance of Common Batch Correction Tools on Low-Yield Data

| Tool/Method | Strength | Weakness for Low-Yield Data | Recommended Use Case |

|---|---|---|---|

| ComBat/ComBat-seq | Strong for known discrete batches. | Assumes balanced design; can over-correct with low N. | Known technical batches (date, lane) with >5 samples/batch. |

| sva (svaseq) | Models unknown/unexpected variation. | Unstable with very low sample numbers (n<10). | Complex studies with hidden covariates. |

| RUVseq | Uses control genes/RNAs for robust correction. | Requires reliable negative/positive controls. | Studies with spike-ins or housekeeping genes validated as stable. |

| limma removeBatchEffect | Simple, linear, preserves biological signal. | Does not integrate with downstream DE model. | Initial exploration and visualization before formal DE. |

Experimental Protocols

Protocol: Systematic Batch Effect Detection and Correction Workflow

Title: RNA-seq Batch Effect Detection & Correction Protocol

Reagents:

- RNeasy Micro Kit (Qiagen) or equivalent for low-yield extraction.

- SMART-Seq v4 Ultra Low Input RNA Kit (Takara Bio) for amplification.

- ERCC RNA Spike-In Mix (Thermo Fisher) for technical normalization control.

- KAPA Library Quantification Kit (Roche) for accurate library QC.

Methodology:

- Experimental Design:

- Randomize biological samples of different conditions across all processing batches (library prep days, sequencing lanes).

- Include at least one technical replicate (same biological sample processed in different batches) and one negative control per batch if possible.

- Wet-Lab Processing:

- For all samples below 100pg total RNA input, use a whole-transcriptome amplification kit.

- Add ERCC spike-ins at a consistent dilution before any amplification step.

- Pool libraries equimolarly based on qPCR quantification, not bioanalyzer concentration.

- Bioinformatic Analysis:

- Alignment & Quantification: Use

STAR+featureCountsorKallistofor pseudoalignment. - Initial QC: Generate PCA plot colored by

Condition,Batch,RIN, andSequencing Depth. - Batch Effect Estimation: Use the

pvcaR package to estimate the proportion of variance explained by batch vs. condition. - Correction:

a. If batches are known and discrete, apply

ComBat-seq(fromsvapackage). b. If hidden factors are suspected, usesvaseqto estimate surrogate variables and include them as covariates inDESeq2orlimma. - Validation: a. Re-plot PCA post-correction. Samples should cluster by condition. b. Correlation between technical replicates should improve. c. Negative controls should form a separate cluster or show minimal genes expressed.

- Alignment & Quantification: Use

Visualizations

Diagram 1: Batch Effect Troubleshooting Decision Tree

Diagram 2: Low-Yield RNA-seq Experimental Workflow with QC Checkpoints

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Reagents for Robust Low-Yield RNA-seq Studies

| Item | Function & Rationale | Example Product |

|---|---|---|

| High-Sensitivity RNA QC Assay | Accurately quantifies nanogram/picogram amounts of RNA. Prevents overestimation leading to failed libraries. | Qubit RNA HS Assay, TapeStation HS RNA ScreenTape |

| Whole-Transcriptome Amplification Kit | Generates sufficient cDNA from ultra-low-input or degraded RNA for standard library prep. | SMART-Seq v4 Ultra Low Input Kit, NuGEN Ovation RNA-Seq System V2 |

| External RNA Controls (Spike-Ins) | Inert, synthetic RNAs added at known concentration. Critical for distinguishing technical noise from biological signal and assessing batch effects. | ERCC ExFold RNA Spike-In Mix |

| Unique Molecular Identifiers (UMIs) | Molecular barcodes that label each original molecule, allowing correction for PCR amplification bias and providing absolute molecule counts. | Duplex-Specific Nuclease (DSN) kits with UMI adapters |

| Batch-Tested Library Prep Reagents | Reagents from a single, large manufacturing lot reduce variability. Essential for multi-batch studies. | Request "lot-matched" kits from suppliers (e.g., Illumina, NEB) |

| qPCR-Based Library Quant Kit | Fluorometric methods (Bioanalyzer) are inaccurate for diverse library sizes. qPCR quantifies only amplifiable fragments, ensuring balanced pooling. | KAPA Library Quantification Kit, NEBNext Library Quant Kit |

Troubleshooting Guides & FAQs

Q1: After running PCA on our low-yield RNA-seq dataset, the samples cluster strongly by processing date rather than biological group. What does this indicate and what are the first steps? A: This is a classic sign of severe batch confounding. The variance from non-biological technical factors (date, operator, reagent lot) is dominating the signal. First steps: 1) Document all meta-data (sample prep date, sequencing lane, kit lot number) in a structured table. 2) Verify the finding by coloring the same PCA plot by other known technical factors. 3) Before any correction, assess whether the batch effect is balanced across groups (e.g., are all cases processed on one day and all controls on another?). An unbalanced design severely complicates correction.

Q2: When using k-means clustering to detect latent batch effects, how do we choose the number of clusters (k)?

A: For batch detection, choose k based on the number of suspected technical groups (e.g., number of sequencing runs). Alternatively, use the Elbow Method on the within-cluster sum of squares plot or a silhouette analysis. A key diagnostic is to cross-tabulate the resulting clusters against known technical and biological variables. A high association between a cluster and a technical factor signals batch confounding.

Q3: Our hierarchical clustering dendrogram shows a primary split that aligns with two different RNA extraction kits. Can we still proceed with differential expression analysis?

A: Proceeding with naive DE analysis is highly discouraged. The kit effect is likely introducing false positives or obscuring true signals. You must apply a batch effect correction method (e.g., ComBat, limma's removeBatchEffect, or a model including 'kit' as a covariate). However, if the kit use is perfectly confounded with your biological condition (e.g., all diseased samples used Kit A), correction is statistically impossible, and the experiment may need to be re-done.

Q4: In our PCA, PCI seems to be driven by a batch effect, but it also explains a very high percentage of variance (>50%). Does removing it risk losing biological signal? A: Yes, aggressive removal of high-variance components can remove biological signal. Do not simply discard PCI. Instead, use a supervised approach: 1) Apply a batch correction algorithm designed to protect biological variance. 2) After correction, re-run PCA to confirm the batch-driven separation is reduced while biological separation is maintained. 3) Validate findings with RT-qPCR on key genes from independent samples.

Q5: We suspect a "hidden" batch effect not recorded in our metadata. How can clustering help identify it? A: Perform unsupervised clustering (e.g., hierarchical, k-means) on the normalized expression matrix. Examine the resulting sample clusters or dendrogram branches for strong cohesion. Then, statistically test (using chi-square or Fisher's exact tests) the association between these data-driven clusters and every available piece of metadata (down to the day of the week). A significant association with an unrecorded factor (like a specific lab technician's shift) can uncover the hidden batch.

Key Experimental Protocol: PCA & Clustering for Batch Effect Detection

- Data Preparation: Start with normalized count data (e.g., TPM, FPKM from low-yield protocols, or corrected counts from DESeq2/edgeR). Log2-transform the data (usually

log2(x + 1)). - PCA Execution: Perform PCA on the transposed matrix (samples x genes) using centered (and often scaled) data. Extract principal components (PCs) and their explained variance.

- Visual Diagnostics: Create scatter plots of PC1 vs. PC2, PC1 vs. PC3, etc. Color points by known biological factors (e.g., disease state) and separately by technical factors (batch, date). Look for clustering/separations driven by technical factors.

- Clustering Analysis: Perform hierarchical clustering with a distance metric (e.g., 1 - correlation) and ward.D linkage on the sample-wise correlation matrix. Plot the dendrogram and color the sample labels by biological and technical groups.

- Statistical Validation: For k-means clustering, create a contingency table comparing cluster assignment to batch/biological group. Apply a chi-squared test to quantify the association strength.

- Reporting: Document the proportion of variance explained by batch-associated PCs and the statistical significance of cluster-batch associations.

Table 1: Common PCA Outcomes and Interpretations in Low-Yield RNA-seq

| PCA Observation | Probable Cause | Recommended Action |

|---|---|---|

| Strong separation by processing date/run on PCI (>40% variance) | High-impact batch effect | Apply batch correction (ComBat-seq, limma); redesign experiment if confounded. |

| Biological group separation only visible on PC3 or later | Moderate batch effect masking biology | Use correction; include batch as covariate in downstream models. |

| No clear clustering by any known factor | Minimal batch effect or failed experiment | Verify RNA quality and library prep; proceed with biological analysis cautiously. |

| Single sample outlier in PCA space | Possible sample contamination or technical failure | Inspect QC metrics for that sample; consider removal if justified. |

Table 2: Comparison of Clustering Methods for Batch Detection

| Method | Key Parameter | Strength for Batch Detection | Limitation |

|---|---|---|---|

| Hierarchical Clustering | Linkage method, distance metric | Visual dendrogram clearly shows sample relationships and major splits. | Less quantitative; interpretation can be subjective. |

| K-means Clustering | Number of clusters (k) | Provides clear cluster assignments for statistical testing against metadata. | Requires pre-specification of k; assumes spherical clusters. |

| Principal Component Analysis | Number of PCs to retain | Directly visualizes largest sources of variance; variance quantifiable. | Linear method; may miss complex nonlinear batch effects. |

| UMAP/t-SNE | Perplexity, neighbors | Can reveal subtle, nonlinear sample groupings. | Results are stochastic; distances not preservable; prone to artifacts. |

Diagrams

Title: Workflow for Visual Diagnostics of Batch Effects

Title: Interpreting Batch Effects in PCA Plots

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Low-Yield RNA-seq Batch Diagnostics |

|---|---|

| ERCC RNA Spike-In Mix | Exogenous controls added before library prep to monitor technical variation and normalization efficacy across batches. |

| UMI Adapters (Unique Molecular Identifiers) | Barcodes individual RNA molecules to correct for PCR amplification bias and improve quantification accuracy in low-input samples. |

| Robust Normalization Software (e.g., DESeq2, edgeR) | Statistical packages that use advanced models (e.g., negative binomial) to normalize counts and are foundational for pre-correction PCA. |

| Batch Correction Algorithms (ComBat-seq, svaseq, RUVseq) | Tools specifically designed to estimate and remove unwanted technical variance while preserving biological signal. |

| High-Sensitivity DNA/RNA Kits (e.g., Bioanalyzer) | For accurate quality assessment of limited material, a critical QC step before sequencing to prevent batch failures. |

| Sample Multiplexing Indexes (e.g., from Illumina) | Allows pooling of samples from different conditions across multiple lanes/runs to balance experimental design and mitigate batch effects. |

Methodologies for Correcting Batch Effects in RNA-seq Count Data

Technical Support Center: Troubleshooting Batch Effects in Low-Yield RNA-Seq

FAQs & Troubleshooting Guides

Q1: After applying ComBat, my low-yield sample clusters are still distinct from the high-yield batch. What went wrong? A: This is common when batch effect is confounded with biological condition, or when the low-yield batch has excessive technical noise that violates ComBat's assumption of identical mean-variance relationships.

- Troubleshooting Steps:

- Diagnose: Use PCA colored by batch and condition separately. If they are aligned, correction may remove biological signal.

- Solution A: Use a method like

limma::removeBatchEffectwith the model~ Condition + Batch, which preserves the condition of interest while adjusting for batch. This is a covariate adjustment approach. - Solution B: For severe noise in low-yield data, consider a two-step approach: first, use a variance-stabilizing transformation (e.g., DESeq2's

vst), then apply a non-parametric correction like Mutual Nearest Neighbors (MNN) viabatchelor::fastMNN, which is robust to distributional differences.

Q2: My negative control samples are not co-clustering after correction, suggesting residual batch effects. How can I quantify this? A: Use a quantitative metric to assess correction performance before analyzing experimental samples.

- Protocol: Calculate the Percent Variance Explained by Batch.

- For your normalized count matrix, perform a PCA.

- Extract the proportion of variance (PC1, PC2) attributed to the "Batch" factor using ANOVA.

- Apply your chosen correction paradigm.

- Re-calculate the PCA on the corrected matrix and again quantify variance explained by Batch.

- Compare pre- and post-correction values. A successful correction drastically reduces the batch-associated variance. See Table 1.

Table 1: Example Batch Variance Metric Before/After Correction

| Correction Paradigm | % Variance (PC1) Explained by Batch | % Variance (PC2) Explained by Batch |

|---|---|---|

| Uncorrected Data | 65% | 22% |

Linear Covariate Adjustment (limma) |

12% | 8% |

| Empirical Bayes (ComBat) | 5% | 3% |

Data Transformation + MNN (batchelor) |

3% | 2% |

Q3: Which correction method should I start with for my pilot low-yield RNA-seq study? A: Follow this diagnostic decision workflow.

Q4: After aggressive correction, my differential expression results show very few significant genes. Did I over-correct? A: Likely yes. Over-correction removes biological signal along with batch noise.

- Validation Protocol: Spike-in Control Analysis.

- If you used ERCC or other spike-in controls, isolate their counts.

- Plot the log2 fold change of spike-ins between conditions within the same batch. They should center around zero (no biological change expected).

- Plot the log2 fold change of spike-ins between batches for the same condition. This measures residual batch effect.

- If both plots show a tight distribution around zero, correction is appropriate. If the within-condition, between-batch spread is wide, correction was insufficient. If the within-batch, between-condition spread is wide, over-correction has occurred, introducing artificial differences.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Low-Yield RNA-Seq Batch Effect Research

| Item | Function in Context |

|---|---|

| ERCC RNA Spike-In Mix | Artificial RNA molecules added at known concentrations to every sample. Serves as an internal standard to differentiate technical batch effects from true biological variation. |

| UMI (Unique Molecular Identifier) Adapters | Oligonucleotide tags that label each original molecule with a unique barcode. Critical for low-yield protocols to correct for PCR amplification bias and enable accurate digital counting, reducing noise that confounds batch correction. |

| Commercial Low-Input Library Prep Kits (e.g., SMART-Seq) | Standardized, optimized protocols for amplifying picogram quantities of RNA. Using the same kit across all batches minimizes protocol-induced batch effects. |

| Inter-Batch Control Reference RNA (e.g., Universal Human Reference RNA) | A well-characterized RNA pool aliquoted and included in every sequencing batch. Provides a stable biological baseline to align batches to. |

| Batch-Aware Analysis Software (R/Python) | sva (ComBat), limma, batchelor, DESeq2. Toolkits implementing the statistical paradigms for correction. |

Q5: How do I implement the Mutual Nearest Neighbors (MNN) correction protocol?

A: Here is a step-by-step workflow using the batchelor package in R, suitable for low-yield data.

Detailed MNN Protocol:

- Normalization: Generate a log-transformed normalized count matrix (e.g., log2-CPM) for each batch separately. Do not globally normalize across batches initially.

- HVG Selection: For each batch, calculate the variance of expression for each gene. Take the union of the top 2000-5000 variable genes across all batches. This focuses correction on informative genes.

- Run Correction: Execute

corrected <- fastMNN(batch1, batch2, batch3, d=50, subset.row=hvg.list). Thedparameter is the number of principal components to use for correction; start with 50. - Output: The

correctedobject contains areconstructedmatrix in log-expression space. Usecorrected$reconstructedfor PCA and clustering. - DE Analysis: For differential expression, it is recommended to use the batch-corrected values as covariates in a linear model (e.g., in

limma) rather than testing directly on them, to preserve uncertainty estimates.

Technical Support Center

Troubleshooting Guides & FAQs

Q1: After applying ComBat to my low-yield RNA-seq dataset, the batch-corrected expression matrix contains negative values. Is this an error? How should I proceed with downstream analysis? A: This is an expected behavior, not an error. ComBat's location and scale adjustment can shift expression values, potentially resulting in negatives, especially for lowly expressed genes in low-yield data.

- Troubleshooting Steps:

- Verify Transformation: Ensure your input data was log-transformed (e.g., log2(CPM+1)) before ComBat. The Empirical Bayes framework in ComBat assumes an approximately normal distribution.

- Downstream Compatibility: For analyses requiring positive values (e.g., certain pathway analysis tools), consider:

- Adding a small constant to the entire matrix to make the minimum value zero or positive.

- Using the

prior.plots=TRUEargument in thesva::ComBat()function to check if the Empirical Bayes priors were appropriately estimated.

- Model Check: Re-examine your model matrix. Ensure batch is correctly specified and no other variables (e.g., condition of interest) are accidentally included in the batch correction model.

Q2: When running ComBat, I receive a convergence warning: "Algorithm did not converge." What does this mean for my low-yield data correction, and how can I resolve it? A: This warning indicates the iterative Empirical Bayes algorithm did not reach its convergence threshold within the default maximum iterations. This is more common with small sample sizes or low-yield data where parameter estimation is challenging.

- Resolution Protocol:

- Increase the maximum iterations using the

maxitparameter (e.g.,ComBat(dat, batch, maxit=1000)). - If the warning persists, visualize the data with

prior.plots=TRUE. If the empirical (data) and parametric (prior) distributions are severely mismatched, the model assumptions may be strained. - As an alternative, consider using the

mean.only=TRUEoption if you suspect scale differences (variance) between batches are minimal in your low-yield experiment.

- Increase the maximum iterations using the

Q3: How do I decide whether to use the "parametric" or "non-parametric" Empirical Bayes option in ComBat for my dataset? A: The choice relates to the robustness of the prior distribution estimation.

- Parametric (

par.prior=TRUE): Assumes the batch effect parameters (additive and multiplicative) follow a normal and inverse gamma distribution, respectively. It is computationally faster and recommended for datasets with >10 samples per batch. - Non-parametric (

par.prior=FALSE): Estimates the priors empirically without assuming a specific distribution shape. Use this for very small batch sizes (e.g., <10 samples per batch), which is typical in low-yield RNA-seq studies. - Recommendation for Low-Yield Data: Start with the non-parametric approach. If you have many batches with very few samples, also investigate the

ref.batchoption in newer ComBat versions to pool information more effectively.

Q4: After successful batch correction, how should I validate the effectiveness of ComBat on my low-yield RNA-seq data? A: Validation is a critical step.

- Experimental Protocol for Validation:

- Principal Component Analysis (PCA): Generate PCA plots colored by batch before and after correction. Successful correction should show batch clusters merging.

- PCA colored by Condition: Ensure biological conditions of interest remain separated after correction.

- Quantitative Metrics: Calculate the following metrics per gene before and after correction. Summarize the results:

| Metric | Formula / Description | Interpretation for Successful Correction |

|---|---|---|

| Percent Variance Explained by Batch | R² from ANOVA (gene ~ batch) | Should be significantly reduced post-ComBat. |

| Median Absolute Deviation (MAD) Ratio | MAD(Residuals from gene ~ condition) / MAD(Residuals from gene ~ batch + condition) | A value closer to 1 indicates batch effect removal without removing biological signal. |

| Distance Metric (e.g., 1 - Pearson correlation) | Average within-batch vs. between-batch sample distance. | Within- and between-batch distances should become more similar post-correction. |

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Batch Effect Correction for Low-Yield RNA-seq |

|---|---|

R/Bioconductor sva Package |

Contains the standard ComBat function for Empirical Bayes batch correction. Essential for implementation. |

bladderbatch / GSE5859 Dataset |

Classic, publicly available benchmark datasets with known batch effects. Used for method validation and training. |

| UMI-based RNA-seq Library Prep Kits (e.g., SMART-Seq2 with UMIs) | Reduces technical noise and PCR duplicates at the source, mitigating batch effects from amplification for low-input samples. |

| External RNA Controls Consortium (ERCC) Spike-Ins | Synthetic RNAs added to lysate before library prep. Monitor technical variation and can inform batch correction models. |

R ggplot2 & pheatmap Packages |

Critical for creating pre- and post-correction diagnostic plots (PCA, boxplots, heatmaps) for visual validation. |

| Inter-Batch Pooled Reference Sample | A control sample split across all batches during library prep and sequencing. Provides a direct anchor for batch alignment methods. |

| Harmony or Seurat Integration | For single-cell or very complex batch structures, these alternative integration methods may complement ComBat's approach. |

Experimental Protocol: Key Method for Evaluating ComBat on Low-Yield Data

Title: Protocol for Benchmarking ComBat Correction Using a Diluted RNA Sample Series.

Objective: To empirically assess ComBat's performance in correcting batch effects introduced across separate sequencing runs using samples of varying, low input RNA amounts.

Materials: High-quality reference RNA (e.g., Universal Human Reference RNA), RNA extraction kit, RNA dilution buffer, UMI-based low-input RNA-seq library prep kit, sequencer.

Methodology:

- Sample Preparation:

- Serially dilute the reference RNA to create a series (e.g., 10ng, 1ng, 0.1ng, 0.01ng) in triplicate.

- Library Preparation & Sequencing (Introducing Batch):

- Divide all samples (12 total) into two "batch" groups (Batch A and Batch B), each containing two replicates of each dilution level. Prepare libraries in separate weeks using the same protocol and reagents.

- Sequence all libraries, but on different flow cells or sequencer runs to introduce technical batch variation.

- Data Processing:

- Align reads, generate a raw count matrix, and normalize to CPM. Apply a log2(CPM+1) transformation.

- Batch Correction & Analysis:

- Apply ComBat (

sva::ComBat(dat=logCPM_matrix, batch=experiment_batch_vector)) using the non-parametric prior option. - Perform PCA on the pre- and post-correction data.

- For each dilution level, calculate the average within-dilution correlation (biological signal) and within-batch correlation (batch artifact) before and after correction.

- Apply ComBat (

Visualization: ComBat Empirical Bayes Workflow

Diagram Title: ComBat Empirical Bayes Batch Correction Algorithm Flow

Diagram Title: Low-Yield RNA-seq Batch Correction Validation Strategy

Troubleshooting Guides & FAQs

Q1: The corrected data shows increased variance for low-count genes after running ComBat-ref. What went wrong?

A: This is often caused by the statistical properties of the Minimum-Dispersion Reference (MDR) batch selection. When the chosen MDR batch has an exceptionally low dispersion for a specific gene, the scaling factor applied to other batches can be too aggressive. Solution: Re-run the algorithm with the mean.only=TRUE parameter or apply a gentle variance-stabilizing transformation (e.g., log2(count+1)) to the raw counts before ComBat-ref, especially for datasets with many zero or near-zero counts.

Q2: How do I choose the reference batch if my data has more than 5 batches? Does ComBat-ref automate this? A: ComBat-ref explicitly automates the selection of the Minimum-Dispersion Reference (MDR) batch. It calculates the gene-wise dispersion (variance-to-mean ratio) across all samples within each batch, then selects the batch with the median dispersion value across all genes as the reference. You should not manually select it. The algorithm is designed to choose the most technically stable batch as the anchor.

Q3: My PCA plot shows batch mixing, but I suspect biological signal has been removed. How can I diagnose this? A: This is a critical issue. Follow this diagnostic protocol: 1. Identify a set of positive control genes (e.g., housekeeping genes or genes known to be differentially expressed between your biological conditions from prior literature). 2. Create a table of their variance before and after correction within the same biological condition but across batches. 3. Run a differential expression analysis (e.g., DESeq2, edgeR) on the uncorrected and corrected data separately. 4. Compare the lists of significant genes. A drastic reduction in the number of significant genes for your primary biological variable suggests over-correction.

Table 1: Diagnostic Metrics for Over-Correction

| Metric | Uncorrected Data | ComBat-ref Corrected Data | Expected Outcome |

|---|---|---|---|

| Mean Variance of Housekeeping Genes | High across batches | Low across batches | Decrease |

| No. of Significant DE Genes (p<0.01) | e.g., 1250 | e.g., 200 | Slight decrease acceptable, drastic drop is not |

| PC1 Correlation with Batch | >0.8 | <0.3 | Decrease |

| PC1 Correlation with Biology | >0.7 | >0.6 | Should remain high |

Q4: I get an error about "negative counts" when applying ComBat-ref to my raw integer count matrix. How do I resolve this?

A: ComBat-ref, like its predecessor, operates on a continuous (log-transformed) scale. You cannot input raw integer counts. You must first transform your count data. We recommend using the vst (variance stabilizing transformation) function from DESeq2 or the voom function from limma-voom. This transforms the counts to a continuous, approximately homoscedastic scale suitable for ComBat-ref's linear model adjustment.

Q5: Does ComBat-ref handle zero-inflated data from low-yield RNA-seq experiments? A: ComBat-ref is more robust than standard ComBat for low-yield data because the MDR batch is less likely to be an outlier batch with high technical noise. However, extreme zero inflation (>90% zeros per gene) remains challenging. Recommendation: Prior to correction, filter out genes with zero counts across a large percentage of samples (e.g., >80%). Perform correction on the remaining genes.

Experimental Protocol: Validating ComBat-ref Performance

This protocol is for benchmarking ComBat-ref against other methods in the context of a thesis on low-yield data.

1. Data Simulation with Known Batch Effects:

- Method: Use the

splatterR package to simulate low-yield RNA-seq count data. Set parameters:batch.facLoc = 0.5,batch.facScale = 0.5for moderate batch effect. For low-yield condition, setlib.locto a low value (e.g.,log(500,000)) and increasedropout.midparameter to induce zero inflation. - Output: A count matrix with known biological groups, known batch labels, and known differentially expressed (DE) genes.

2. Batch Effect Correction Application:

- Input: Simulated raw count matrix.

- Steps:

a. Apply variance stabilizing transformation (

DESeq2::vst). b. Apply three correction methods: 1) Standard ComBat, 2) ComBat-ref (MDR), 3) limma'sremoveBatchEffect. c. Run Principal Component Analysis (PCA) on each corrected dataset.

3. Performance Quantification:

- Metric 1 - Batch Mixing: Calculate the Adjusted Rand Index (ARI) between batch labels and k-means clusters (k=number of batches) on PC1 and PC2. Lower ARI indicates better batch mixing.

- Metric 2 - Signal Preservation: Perform DE analysis (e.g., using

limmaon corrected data) to recover the simulated DE genes. Calculate the F1-score (harmonic mean of precision and recall).

Table 2: Example Performance Comparison (Simulated Data)

| Correction Method | ARI (Batch Mixing) ↓ | F1-Score (DE Recovery) ↑ | Computation Time (s) |

|---|---|---|---|

| Uncorrected | 0.85 | 0.55 | N/A |

removeBatchEffect |

0.25 | 0.78 | 2.1 |

| Standard ComBat | 0.15 | 0.82 | 5.5 |

| ComBat-ref (MDR) | 0.10 | 0.88 | 6.8 |

Visual Workflows

Diagram 1: ComBat-ref Core Algorithm Workflow

Diagram 2: Thesis Validation Protocol for Batch Effect Correction

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Implementing & Validating ComBat-ref

| Item / Reagent | Function in ComBat-ref Context | Example / Note |

|---|---|---|

| R Statistical Environment (v4.2+) | Primary platform for running the correction algorithm. | Required base installation. |

sva R Package (v3.46.0+) |

Contains the ComBat_ref function. |

Must be installed from Bioconductor. |

DESeq2 or limma-voom |

Provides the essential Variance-Stabilizing Transformation (VST) for preparing count data. | DESeq2::vst() is the standard pre-processing step. |

splatter R Package |

Simulates realistic RNA-seq data with tunable batch effects and low-yield parameters for method validation. | Critical for thesis benchmarking experiments. |

| Positive Control Gene Set | A curated list of stable (housekeeping) and known differentially expressed genes to monitor over-correction. | e.g., GAPDH, ACTB; or genes from prior pilot study. |

| High-Performance Computing (HPC) Cluster or RStudio Server | Enables correction of large datasets (>1000 samples) and parallel simulation studies. | ComBat-ref is computationally efficient but HPC aids in validation scaling. |

Technical Support Center

Troubleshooting Guides & FAQs

Q1: After running the automated quality score (AQS) algorithm, all my samples from Batch 2 receive a consistently low score, but the raw PCA looks fine. What could be the cause?

A: This often indicates a technical artifact not captured by principal variance. First, verify the negative control genes you supplied. If the control list contains genes with true biological signal in your experiment, the AQS will be miscalibrated. Action: Re-run the AQS using a verified "housekeeping" list from a database like EBI Expression Atlas for your specific tissue/cell type. Second, check for library size outliers in Batch 2; severe imbalances can skew the score. Normalize read counts using the Trimmed Mean of M-values (TMM) method before AQS calculation.

Q2: My batch correction using ComBat-seq, triggered by a poor AQS, resulted in over-correction and the loss of a key biological signal. How can I troubleshoot this?

A: Over-correction occurs when the model mistakenly interprets biological variance as batch effect. Step 1: Isolate the signal. Re-run the correction, withholding the key sample group as a reference. If the signal disappears in the corrected data, it's likely being modeled as batch. Step 2: Adjust the model. Use ComBat-seq with the `model option, supplying your sample phenotype matrix. This anchors the biological groups, preventing their removal. Step 3: Consider a milder method likeHarmonyorlimma's removeBatchEffect` with partial adjustment.

Q3: The batch detection pipeline fails on my low-yield RNA-seq data, citing "insufficient variance." What parameters can I adjust? A: Low-yield data (e.g., single-cell or low-input bulk) has high technical noise. Protocol Adjustment:

- Feature Selection: Switch from using all genes to the top 2,000 highly variable genes (HVGs) for PCA input. Use

Seurat'sFindVariableFeaturesorscanpy'spp.highly_variable_genes. - AQS Threshold: Manually lower the AQS failure threshold (e.g., from the default Z<-2 to Z<-1.5) after visually inspecting PCA plots of positive controls.

- Quality Metric: Supplement the AQS with a sample-level QC metric like

percent mitochondrial reads. A sudden shift in this metric between batches can confirm a technical issue.

Q4: I am integrating data from two different sequencing platforms (e.g., Illumina NovaSeq and HiSeq). Which correction method is most robust? A: Platform differences introduce strong, non-linear batch effects. The recommended workflow is:

- Detection: AQS will typically flag this. Confirm with a

density plotof logCPM values per batch. - Correction: Use a non-linear or adversarial method.

- Option A (Neural Network):

scGenorANOVA-based DAE(Denoising Autoencoder). - Option B (Harmony): Apply

Harmonyon the top 50 principal components. - Key Step: Always preserve a hold-out dataset from one platform to validate that biological clusters merge post-correction without signal loss.

- Option A (Neural Network):

Table 1: Performance Comparison of Batch Correction Methods on Simulated Low-Yield RNA-seq Data

| Method | Correction Strength (Median) | Biological Signal Preservation (F1-Score) | Runtime (minutes) | Recommended Use Case |

|---|---|---|---|---|

| ComBat-seq | 0.92 | 0.85 | 3 | Strong, linear batch effects. |

| Harmony | 0.88 | 0.91 | 8 | Complex, non-linear effects; multi-platform. |

| limma removeBatchEffect | 0.79 | 0.94 | <1 | Mild batch effects; priority on signal. |

| sva (svaseq) | 0.85 | 0.88 | 5 | When batch covariates are unknown. |

| BERMUDA (Autoencoder) | 0.90 | 0.89 | 25 | Very large, heterogeneous datasets. |

Note: Simulation based on 100 samples (5 batches, 2 biological groups), 15M reads/sample average. Strength measured as reduction in batch variance (0-1 scale).

Table 2: Impact of Automated Quality Score (AQS) Threshold on Batch Detection Accuracy

| AQS Threshold (Z-score) | False Positive Rate (%) | False Negative Rate (%) | Recommended Action |

|---|---|---|---|

| -3.0 (Very Strict) | 1.2 | 18.5 | Risk missing subtle batches; use for high-quality data. |

| -2.0 (Default) | 5.0 | 7.3 | General purpose for standard yield RNA-seq. |

| -1.5 (Sensitive) | 12.7 | 3.1 | Recommended for low-yield data. Requires manual review. |

| -1.0 (Very Sensitive) | 24.5 | 0.9 | Generates many flags; only for pilot studies. |

Experimental Protocols

Protocol 1: Automated Quality Score (AQS) Calculation and Batch Detection Purpose: To algorithmically detect the presence of significant technical batch effects in an RNA-seq dataset. Input: Normalized count matrix (e.g., log2(CPM+1)), sample metadata (batch, phenotype), list of negative control genes. Procedure:

- Feature Selection: Subset the matrix to the negative control genes. These should exhibit minimal biological variation.

- Dimensionality Reduction: Perform Principal Component Analysis (PCA) on the subset matrix.

- Batch Association Test: For the top 5 PCs, perform a linear model test (e.g., ANOVA) associating each PC with the known batch covariate. Record the p-value.

- Score Calculation: Transform the p-values via -log10(p). Sum the transformed values for PCs 1-5. This sum is the raw batch association score (BAS).

- Standardization: Using a null distribution of BASs (generated from 1000 permutations where batch labels are randomly shuffled), convert the observed BAS to a Z-score. This Z-score is the Automated Quality Score (AQS).

- Decision: Flag the dataset for correction if AQS < -2.0 (or a user-defined threshold).

Protocol 2: ComBat-seq Batch Correction with Biological Covariate Protection Purpose: To remove batch effects while preserving specified biological group signals. Input: Raw count matrix, batch covariate vector, biological group covariate vector. Procedure:

- Model Specification: Use the

model.matrix()function in R to create a design matrix that includes the biological group of interest (e.g.,~ disease_state). - ComBat-seq Execution: Run the ComBat-seq function (

svapackage) with the following key arguments:counts = raw_count_matrixbatch = batch_vectorgroup = biological_group_vector(Optional, for advanced anchoring)covar_mod = design_matrix(Critical: This tells the model what variation to preserve).full_mod = TRUE(Uses the full design matrix for preservation).

- Output: A batch-corrected raw count matrix, suitable for downstream differential expression analysis.

Visualizations

AQS Calculation and Decision Workflow

ComBat-seq with Covariate Protection Pathway

The Scientist's Toolkit

Table 3: Research Reagent & Computational Solutions for Batch Effect Management

| Item / Resource | Function / Purpose | Example / Note |

|---|---|---|

| Negative Control Gene Set | Provides a stable baseline for algorithmic batch detection. | Use tissue-specific lists from EBI Expression Atlas or HKgenes R package. |

| sva Package (v3.48.0+) | Contains the ComBat_seq function for count-based batch correction. |

Critical for low-count RNA-seq; covar_mod argument protects biological signals. |

| Harmony (R/Python) | Integration algorithm for complex, non-linear batch effects. | Effective for cross-platform data (NovaSeq vs. HiSeq). Returns corrected embeddings. |

| Trimmed Mean of M (TMM) | Normalization method for library size differences. | Applied via edgeR::calcNormFactors. Prerequisite for AQS on raw counts. |

| Scater / Scran Pipeline | Provides modelGeneVar for HVG selection in low-yield contexts. |

More robust than simple variance ranking for noisy data. |

| Berkeley BISP Website | Repository for the BERMUDA adversarial learning correction tool. | For advanced, non-linear correction in large-scale integrative studies. |

| FastQC + MultiQC | Initial QC to rule out sequencing artifacts before batch analysis. | Confounds like adapter contamination must be ruled out first. |

| UMAP Visualization | Non-linear dimensionality reduction to visually assess correction success. | Use after correction to check cluster mixing and biological separation. |

Troubleshooting Guides & FAQs

FAQ 1: My batch-corrected data shows increased correlation between known biological groups. Is this expected or an artifact?

- Answer: This is a common and often expected outcome when batch effects are severe and obscuring true biological signal. A successful correction should increase the correlation within biological replicates and distinct groups (e.g., treated vs. control) by removing non-biological, batch-driven variance. However, you must validate this is not an over-correction artifact. Check by:

- PCA Plots: Verify that samples cluster primarily by biology, not by batch, post-correction.

- Negative Controls: Ensure known negative control samples (e.g., from the same condition) do not artificially separate.

- Preserved Variance: Use metrics like the Preservation of Biological Variance score from the

pvcaR package to quantify this.

FAQ 2: After using ComBat-seq, my gene expression matrix contains zeros or negative numbers. How should I proceed with downstream analysis (e.g., DEG with DESeq2)?

- Answer: ComBat-seq models count data directly and outputs corrected counts, which should be non-negative integers. Negative numbers or decimals suggest an issue. Follow this protocol:

- Re-check Input: Ensure your input to ComBat-seq (

svaR package) is a raw count matrix (integer). Do not use log-transformed or normalized data. - Rounding: The function has a

roundargument. Setround=TRUEto obtain integers. - Zero Handling: If zeros remain, this is normal for count data. DESeq2 can handle zero-inflated data. Do not replace zeros arbitrarily.

- Actionable Step: Re-run ComBat-seq with:

corrected_counts <- ComBat_seq(counts, batch=batch, group=group, round=TRUE)

- Re-check Input: Ensure your input to ComBat-seq (

FAQ 3: In low-yield RNA-seq data, batch correction removes too much signal, and my differential expression list becomes empty. What are my options?

- Answer: Low-yield data has high technical noise, making batch effect separation difficult. Implement a stepped approach:

- Softer Correction First: Use

limma::removeBatchEffect()on log-CPM values. This adjusts data for batch effects but does not remove all batch-associated variance, preserving more potential biological signal. - Batch-Aware Modeling: In your differential expression tool, include

batchas a covariate in the design formula (e.g.,~ batch + conditionin DESeq2 or limma). This is often more conservative. - Prioritization: Use an RUV-based method (

RUVSeq), which can leverage negative control genes (e.g., housekeeping genes you know should not change) to estimate and remove unwanted variation more precisely.

- Softer Correction First: Use

FAQ 4: How do I choose between ComBat, limma removeBatchEffect, and RUV for my specific dataset?

- Answer: The choice depends on your data structure and the strength of the batch effect. Use this decision guide:

| Method (Package) | Best For | Input Data Type | Key Requirement | Risk of Over-correction |

|---|---|---|---|---|

ComBat / ComBat-seq (sva) |

Strong, multi-level batch effects. | ComBat: Normalized log-data. ComBat-seq: Raw counts. | Sufficient replicates per batch. | Moderate-High |

removeBatchEffect (limma) |

Mild-moderate batch effects; exploratory analysis. | Log2-normalized expression values. | Linear model assumption. | Low |

RUV (RUVSeq, RUVcorr) |

Low-yield/data with high noise; no good batch model. | Raw counts or normalized data. | List of negative control genes/samples. | Variable (depends on controls) |

Detailed Protocol: Implementing and Validating ComBat-seq for Low-Yield Data

- Step 1: Quality Control & Pre-filtering.

- Load raw count matrix and metadata with batch and biological group information.

- Filter lowly expressed genes:

keep <- rowSums(counts >= 5) >= min_sample_size(e.g., minsamplesize = smallest group size). - Apply filter:

counts.filtered <- counts[keep,].

- Step 2: Apply ComBat-seq Correction.

- Install and load the

svapackage. - Run correction:

corrected.counts <- ComBat_seq(counts.filtered, batch = metadata$batch, group = metadata$condition, full_mod = TRUE, round = TRUE). - The

groupparameter is crucial—it protects biological variation of interest.

- Install and load the

- Step 3: Validation of Correction.

- Perform PCA on log2(corrected.counts + 1) and color points by batch and by condition.

- Calculate Average Silhouette Width by batch (should decrease) and by condition (should increase or stay stable) post-correction.

- Quantify with Percent Variance Explained by batch before/after correction using a simple ANOVA model.

The Scientist's Toolkit: Key Research Reagent Solutions

| Item | Function in Batch Effect Mitigation |

|---|---|

| ERCC RNA Spike-In Mixes | Exogenous controls added prior to library prep to monitor technical variation across batches. |

| UMI (Unique Molecular Index) Adapters | Allows correction for PCR amplification bias, a major source of batch noise in low-input protocols. |

| Commercial Low-Input/ Single-Cell Library Kits | Standardized, optimized reagents reduce protocol-driven batch effects compared to in-house methods. |

| Inter-Plate Calibration Samples | Aliquots from a single, large-quality RNA sample run on every plate/sequencing batch for direct cross-batch normalization. |

| Nuclease-Free Water (from single lot) | Consistent water source prevents RNase and metal ion contamination that can create batch-specific inhibition. |

Workflow & Relationship Diagrams

Title: Bulk RNA-seq Batch Correction Decision Workflow

Title: Observed Data as Sum of Signal and Noise

Troubleshooting Guides & FAQs

Q1: My low-yield dataset has many zero counts after pre-processing. Is ComBat-seq suitable for this, or should I use ComBat-ref? A: ComBat-ref is explicitly designed for this scenario. Standard ComBat-seq requires raw count data and struggles with excessive zeros. ComBat-ref allows you to leverage a robust, high-quality reference batch to stabilize the correction for your low-yield study batch. Proceed with ComBat-ref.

Q2: I receive a "Error in solve.default(t(design) %*% design) : system is computationally singular" message. What does this mean? A: This indicates perfect multicollinearity in your design matrix. In the context of batch correction, it often means your batch variable is confounded with another covariate (e.g., all samples from Batch A are also from Treatment Group 1). You must:

- Review your experimental design.

- Remove the confounded covariate from the model formula in the ComBat-ref call. You can only correct for batch while preserving variation from covariates that vary within batches.

Q3: After applying ComBat-ref, my PCA plot shows improved batch mixing, but the expression values for some genes are negative. Is this expected? A: Yes. ComBat-ref returns normalized, batch-adjusted expression values on a continuous scale, which can include negative numbers. This is standard for this type of parametric adjustment. For downstream analyses requiring positive values or counts (e.g., some differential expression tools), you may need to transform the data (e.g., adding a constant) or use tools compatible with continuous data.

Q4: How do I choose an appropriate reference batch for my low-yield data? A: The reference batch must be of high quality. Follow this protocol:

- Identify Candidate: Select a batch from your study or public data with high sequencing depth, high RNA quality (RIN > 8), and large sample size (n > 20).

- Validate: Perform PCA on the candidate batch alone. It should show tight clustering by biological group, not technical artifacts.

- Check Compatibility: Ensure the reference batch contains the same biological conditions or cell types as your low-yield study batch. Reference and study batches must be profiled on the same platform.

Key Experimental Protocol: Applying ComBat-ref to Low-Yield RNA-seq Data

Methodology:

- Data Preparation: Start with raw count matrices for both the Reference Batch (high-yield) and the Study Batch (low-yield). Filter out genes with zero counts across all samples. Perform a minimal count transformation:

log2(count + 1). - Data Merging: Combine the two matrices, keeping track of batch labels (e.g., "Ref" and "Study").

- ComBat-ref Execution: Use the

combatreffunction from theComBatRefR package (v1.0+).

- Post-Correction Validation: Generate PCA plots pre- and post-correction. Calculate the Average Silhouette Width by batch; it should decrease post-correction.

Data Presentation: Performance Metrics for ComBat-ref on Simulated Low-Yield Data

Table 1: Comparison of Batch Effect Correction Methods on Low-Yield Data (n=5 per batch)

| Method | Mean ASW (Batch)* | Biological Variance Preserved (%) | Computation Time (s) |

|---|---|---|---|

| No Correction | 0.82 | 100 | N/A |

| Standard ComBat-seq | Failed | N/A | N/A |

| ComBat-ref | 0.12 | 98.5 | 45 |

| Limma removeBatchEffect | 0.35 | 95.2 | 12 |

*ASW (Average Silhouette Width): Ranges from -1 to 1; values closer to 0 indicate better batch mixing.

Visualizations

Diagram 1: ComBat-ref Workflow for Low-Yield Data

Diagram 2: Troubleshooting Logic for Common ComBat-ref Errors

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Reliable ComBat-ref Analysis

| Item | Function in Experiment | Example/Specification |

|---|---|---|

| High-Quality Reference RNA-seq Dataset | Provides the stable expression profile to anchor the correction of the low-yield batch. | In-house control cohort (n>20, RIN>8) or curated public dataset (e.g., GTEx). |

| ComBatRef R Package | Implements the ComBat-ref algorithm for batch correction with a specified reference. | Version 1.0 or higher from Bioconductor/GitHub. |

| RNA Isolation Kit for Low Input | To maximize yield from precious/scarce samples for the study batch. | Takara Bio SMARTer kit, NuGEN Ovation. |

| UMI-based Library Prep Kit | Reduces technical noise (PCR duplicates) critical in low-yield data. | 10x Genomics Chromium, Parse Biosciences kit. |

| R/Bioconductor Packages for QC | Assesses data quality pre- and post-correction. | scater, DEGreport, sva. |

Troubleshooting Batch Correction: Avoiding Over-Correction and Signal Loss

Technical Support Center

Troubleshooting Guides & FAQs

Q1: After batch effect correction on my low-yield RNA-seq dataset, the PCA plot still shows strong clustering by batch. What are the primary reasons and solutions?

A: Persistent batch clustering after standard correction (e.g., ComBat) often indicates that batch effects are confounded with biological signal or are non-linear. In low-yield data, amplified technical noise can overwhelm correction algorithms.

- Actionable Steps:

- Diagnose: Use the